📌 What This Blog Post Covers

This post is designed to be practical from start to finish. I wrote it for anyone who wants to A/B test Shopify popups in a clear, structured way without turning the process into something overly technical or time-consuming.

As you go through the guide, you’ll learn:

- 🧪 What A/B testing actually means when applied to Shopify popups

- 🛠️ How to set up and run a popup A/B test step by step

- 🎯 Which popup elements are worth testing first (and which usually aren’t)

- ⏱️ How long to run tests and how to read results without jumping to conclusions

- 🔌 Which tools support real A/B testing for Shopify popups and how to choose between them

- ⚠️ The most common mistakes that lead to misleading results

- 📊 Real, data-backed case studies you can learn from

- 🔁 How to turn A/B testing into an ongoing improvement process instead of a one-time experiment

The goal isn’t just to help you run a test. It’s to help you understand why certain popups perform better than others—so every change you make is intentional, measurable, and easier to repeat.

🔬 What Is A/B Testing?

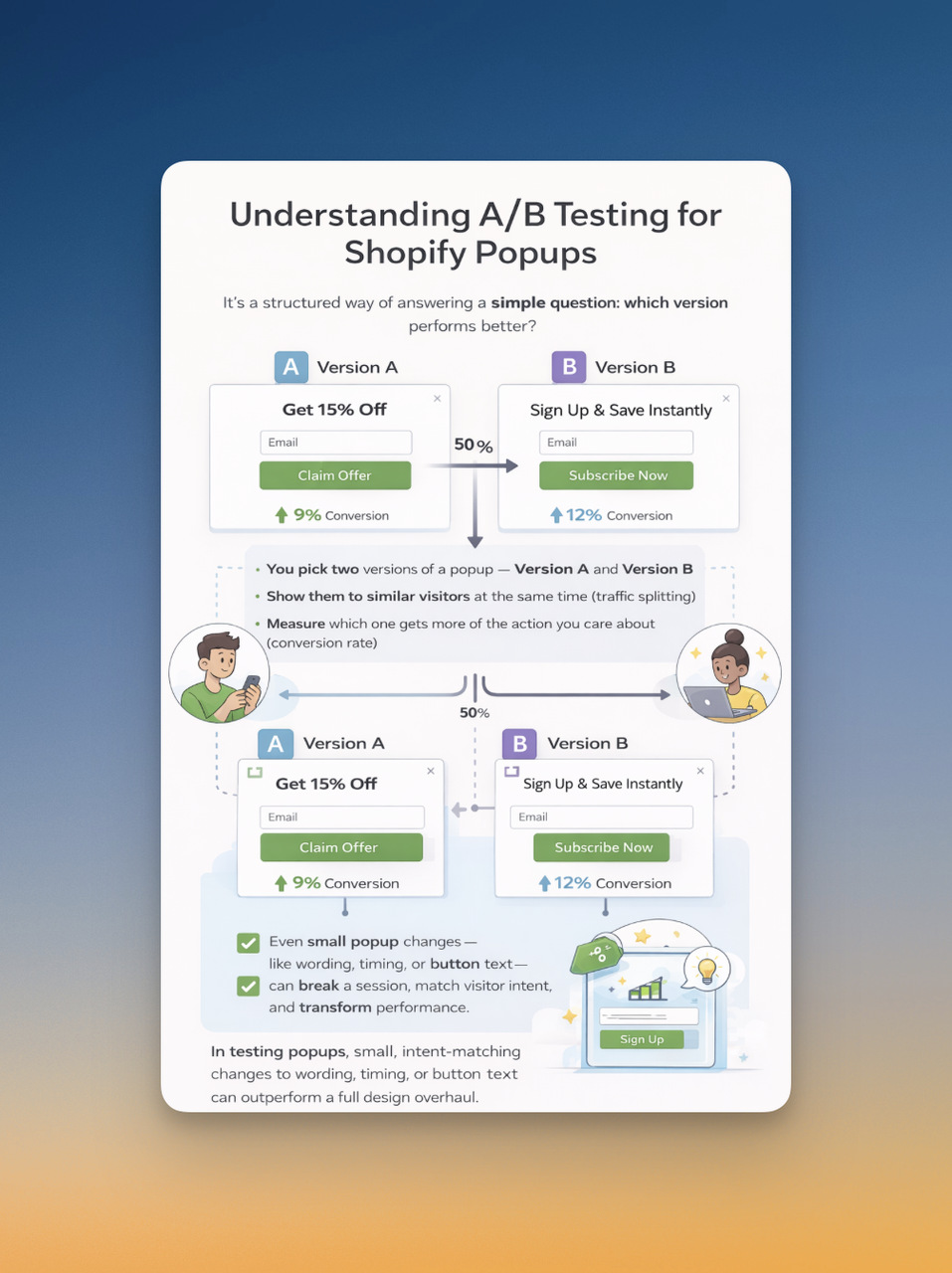

At its core, A/B testing is just a structured way of answering a very simple question: which version performs better? You take two variations of the same thing—Version A and Version B—show them to similar users at the same time, and measure which one gets more of the action you care about. In my experience, A/B testing only sounds intimidating until you realize it’s really just controlled comparison, not complex math.

What makes A/B testing Shopify popups slightly different from testing a landing page or a product page is intent and timing. Popups interrupt a session by design, so even small changes, like wording, delay, or button text, can dramatically affect results. I’ve seen cases where changing just one line in a popup headline outperformed a full design overhaul, simply because it matched visitor intent better at that moment.

🧠 How A/B Testing Actually Works

Here’s how I explain it to anyone new to this:

- You create two versions of the same popup

- Only one element changes (headline, CTA, offer, timing, etc.)

- Traffic is split between the two versions

- You track a single primary metric (usually conversion rate)

- The better-performing version wins—or teaches you something

That’s it. No magic.

What does matter, and this is where people often slip, is discipline. A/B testing only works if:

- Both versions run at the same time

- Traffic is split randomly

- The test runs long enough to be meaningful

Change too many things at once, stop the test too early, or rely on “it feels better,” and you’re no longer A/B testing; you’re just experimenting blindly.
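To make the “split randomly” condition concrete, here is a minimal Python sketch of how a tool might assign visitors to a version. It is an illustration, not how any particular popup app works: the function name `assign_variant`, the visitor ID format, and the experiment name are all assumptions. Hashing the visitor ID keeps the split effectively random across visitors while showing a returning visitor the same version every time.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, split_b: float = 0.5) -> str:
    """Return 'A' or 'B' for a visitor, stable across repeat visits."""
    # Hash the experiment name together with the visitor ID so that
    # separate experiments get independent splits.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < split_b else "A"


# Hypothetical usage: count how 10,000 made-up visitors are distributed.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "headline-test")] += 1
print(counts)
```

The counts should land close to an even split. The exact numbers depend on the hash, so treat them as a sanity check rather than a target.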

⚖️ A/B Testing vs Multivariate Testing

I want to clear this up early, because I see confusion around it all the time.

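Multivariate testing changes several elements at once and compares every combination, which spreads your traffic across many more versions. For Shopify popups, A/B testing is almost always the better choice. Most stores simply don’t have the traffic volume needed to test multiple variables at the same time reliably. In my experience, single-variable A/B tests are faster, cleaner, and lead to clearer decisions.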

🛍️ What Makes Popup A/B Testing on Shopify Unique

Popup A/B testing isn’t just about conversion rate; it’s about balance.

You’re testing:

- Attention vs interruption

- Incentive vs trust

- Urgency vs annoyance

That’s why popup A/B tests often focus on micro-elements:

- One line of copy

- A button label

- A delay of a few seconds

- An exit-intent trigger instead of scroll-based

These small changes matter more in popups than almost anywhere else on a Shopify store.

Now, let's move into the practical part and break down exactly how I A/B test a popup on Shopify, step by step, starting with goal-setting and metrics.

🧪 Step-by-Step: How to A/B Test a Popup on Shopify

This is the part where most guides either overcomplicate things or skip the thinking entirely. I’ll walk you through exactly how I A/B test Shopify popups, step by step, without turning it into a CRO thesis.

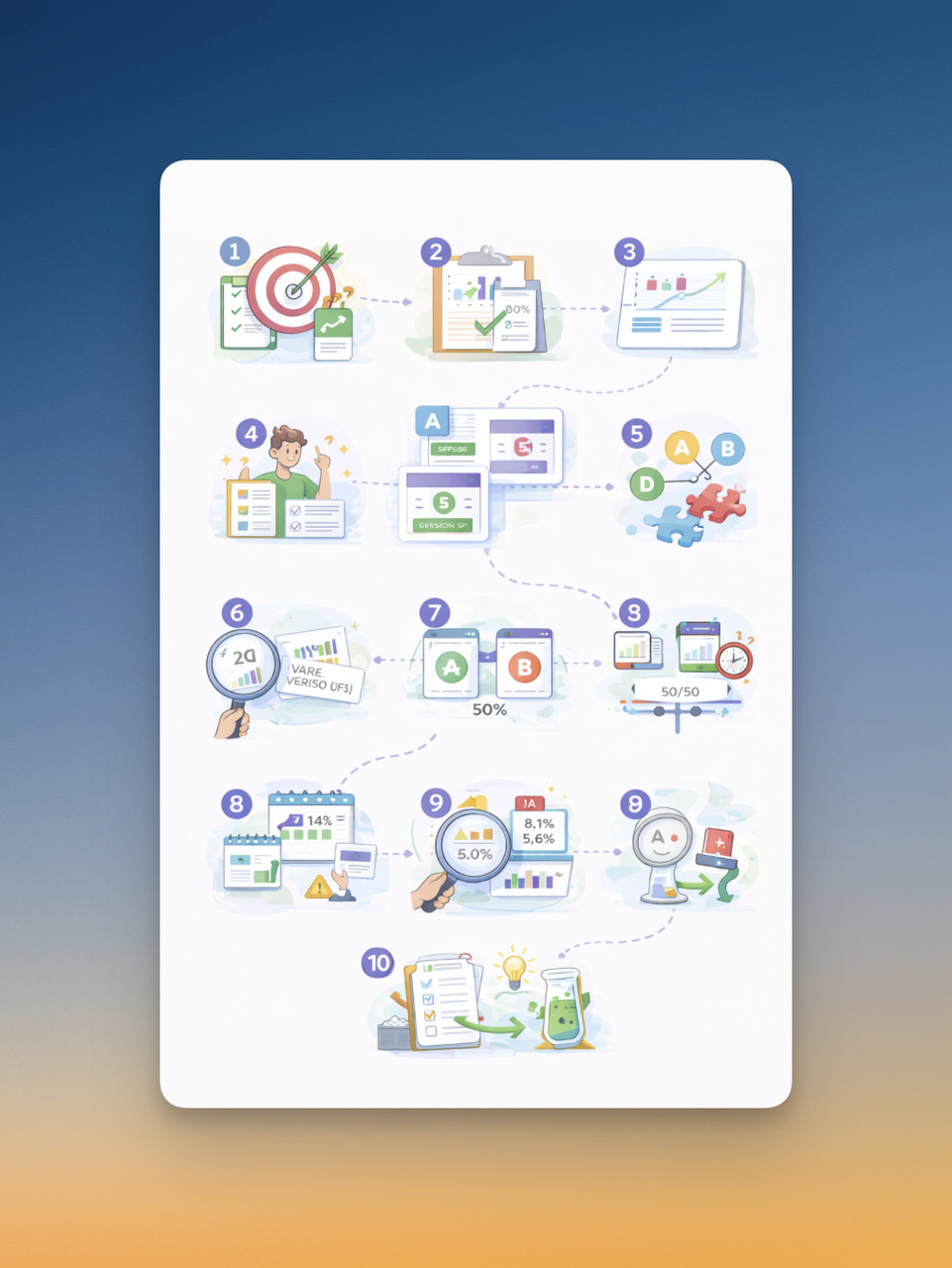

🎯 3.1 Define Your Goal

Before I touch copy, colors, or triggers, I ask myself one simple question:

> What do I want this popup to achieve?

If you don’t answer this clearly, your A/B test will be noisy no matter how “clean” the setup is.

Common popup goals I work with:

- Email signups

- SMS opt-ins

- Discount code claims

- Click-throughs to a collection or product

- Reduced exit rate (for exit-intent popups)

👉 Rule I follow: one popup = one primary goal.

📊 3.2 Choose the Right Metric to Measure Success

Once the goal is clear, the metric becomes obvious. I never track everything at once, just the one number that reflects success. For an email signup popup, for example, that number is signups divided by popup impressions.

Tracking too many metrics at once makes it harder to decide a winner. I’d rather be decisive than “data-rich.”

📉 3.3 Establish a Baseline Performance

Before running any A/B test, I always check:

- Current conversion rate

- Average daily impressions

- Average daily conversions

Why? Because without a baseline, you don’t know whether:

- Version B is actually better

- Or Version A was just having a bad week

Even a 7–14 day baseline is enough to anchor your expectations.
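The arithmetic behind a baseline is simple enough to sketch in a few lines of Python. The daily numbers below are made up for illustration, and `baseline` is a hypothetical helper; in practice you would use the figures from your popup tool’s dashboard.

```python
from statistics import mean


def baseline(daily_impressions, daily_conversions):
    """Summarize a baseline period from per-day popup counts."""
    total_impressions = sum(daily_impressions)
    total_conversions = sum(daily_conversions)
    return {
        # Overall rate uses totals, so busy days count for more than quiet ones.
        "conversion_rate": (
            total_conversions / total_impressions if total_impressions else 0.0
        ),
        "avg_daily_impressions": mean(daily_impressions),
        "avg_daily_conversions": mean(daily_conversions),
    }


# Hypothetical 7-day baseline (Monday to Sunday).
impressions = [400, 420, 380, 410, 390, 500, 500]
conversions = [12, 13, 11, 12, 12, 15, 15]
summary = baseline(impressions, conversions)
print(summary)
```

Computing the rate from totals rather than averaging seven daily rates avoids giving a slow Tuesday the same weight as a busy weekend.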

🧠 3.4 Create a Clear Hypothesis

This is where most popup tests quietly fail.

A good hypothesis is not:

“Let’s see if this converts better.”

A good hypothesis connects a change to a reason.

Here’s the structure I use every time:

If I change X, then Y will happen, because Z.

Examples:

- If I change the CTA from “Submit” to “Get My Discount,” the conversion rate will increase because the value is clearer.

- If I delay the popup by 10 seconds instead of 3, opt-ins will improve because users have more context.

If I can’t explain why a change might work, I usually don’t test it.

🧩 3.5 Decide What Popup Element to Test

I strongly believe in one variable per test. Especially for Shopify popups.

Here are the elements I test most often, in priority order:

- Headline & copy (highest impact)

- CTA button text

- Offer or incentive

- Timing & trigger

- Design or visuals

What I avoid early on:

- Testing multiple elements together

- Drastic redesigns

- “Let’s change everything” tests

Small, focused changes win more often than big creative swings.

🧱 3.6 Build Version A vs Version B

This part should feel boring—and that’s a good thing.

- Version A = your current popup (control)

- Version B = same popup, one change only

I always double-check:

- Same trigger

- Same audience

- Same device targeting

- Same traffic split

If anything else changes, the test becomes unreliable.
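One way to keep myself honest here is to compare the two configurations field by field. The sketch below assumes a popup can be described as a plain dictionary; the field names (`headline`, `cta`, `trigger`, and so on) are hypothetical and will not match any specific tool’s export.

```python
def changed_fields(control: dict, variant: dict) -> list[str]:
    """List every setting that differs between the two popup versions."""
    keys = sorted(set(control) | set(variant))
    return [key for key in keys if control.get(key) != variant.get(key)]


# Hypothetical popup settings; Version B changes only the CTA text.
control = {
    "headline": "Join our newsletter",
    "cta": "Submit",
    "trigger": "delay_5s",
    "audience": "new_visitors",
    "devices": "all",
    "traffic_share": 0.5,
}
variant = {**control, "cta": "Get My Discount"}

diff = changed_fields(control, variant)
if len(diff) == 1:
    print(f"Clean test: only '{diff[0]}' differs.")
else:
    print(f"Not a single-variable test. Differences: {diff}")
```

If the list comes back with more than one entry, something besides the test variable drifted between versions.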

🔀 3.7 Split Traffic and Launch the Test

For most popup A/B tests, I use a 50/50 traffic split. It’s simple and gives both versions equal exposure, so neither one is favored.

Before launching, I run a quick checklist:

- ✅ Both versions published

- ✅ Tracking working

- ✅ Conversion event confirmed

- ✅ No overlap with other popup tests

Overlapping tests = messy data. I avoid it whenever possible.
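A quick sanity check after launch is to confirm the traffic actually split the way you configured it. The sketch below uses a chi-square goodness-of-fit test, often called a sample ratio mismatch check. It is a standard statistical technique, not a feature of any specific popup tool, and the impression counts are invented.

```python
import math


def split_check_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """P-value for 'the observed split matches the configured split'."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # With 1 degree of freedom, the chi-square tail probability
    # equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical impression counts for a configured 50/50 split.
print(split_check_p_value(5030, 4970))  # small imbalance, plausible by chance
print(split_check_p_value(5200, 4800))  # large imbalance, worth investigating
```

A very small p-value suggests the split itself is off (for example, one version failing to load on some devices), which is worth fixing before reading any conversion numbers.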

⏳ 3.8 How Long I Let a Popup A/B Test Run

This is one of the most common questions I get.

My rule of thumb:

- Minimum: 7 days

- Better: 14 days

- Even better: full business cycle (including weekends)

I never stop a test just because:

- Version B “looks better” after one day

- I’m impatient

- Stakeholders want fast answers

Early results lie more often than they tell the truth.
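The 7 and 14 day figures above are rules of thumb. If you want a rough estimate based on your own traffic, a standard sample size approximation for comparing two conversion rates can be sketched as follows. The 5% significance level and 80% power are conventional defaults assumed here, and the baseline rate, target rate, and daily impressions are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(p_base, p_target, alpha=0.05, power=0.8):
    """Approximate visitors needed per version to detect p_base -> p_target."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    n = (z_alpha + z_beta) ** 2 * variance / (p_target - p_base) ** 2
    return math.ceil(n)


def days_needed(n_per_variant, daily_impressions, minimum_days=7):
    """Days to collect enough traffic for both versions, never below the minimum."""
    days = math.ceil(2 * n_per_variant / daily_impressions)
    return max(days, minimum_days)


# Hypothetical store: 3% baseline, hoping for 4%, 500 popup impressions a day.
n = visitors_per_variant(0.03, 0.04)
print(n, days_needed(n, daily_impressions=500))
```

With these made-up inputs the estimate comes out to roughly 5,300 visitors per version, or about three weeks of traffic. Smaller expected differences push that number up quickly, which is why low-traffic stores need longer tests.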

📈 3.9 Analyze Results and Pick a Winner

When I analyze results, I look at:

- Conversion rate difference

- Total conversions (not just percentages)

- Consistency over time

If Version B wins clearly, great.

If results are close, I ask:

- Is the traffic volume enough?

- Was the hypothesis strong?

- Should I iterate instead of declaring a winner?

Not every test needs a dramatic outcome to be useful.
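When results are close, a simple significance check helps answer the “is the traffic volume enough?” question. Below is a minimal sketch of a pooled two-proportion z-test using only the Python standard library. This is one common way to compare two conversion rates, not necessarily what your popup tool does internally, and the counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No conversions at all (or all converted): nothing to compare.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: A converts 120 of 4,000, B converts 160 of 4,000.
z, p = two_proportion_z_test(120, 4000, 160, 4000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below a threshold you chose in advance (0.05 is a common convention) suggests the difference is unlikely to be noise. Above it, I treat the test as inconclusive and iterate.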

🔁 3.10 What I Do After a Test Ends

This is where real optimization happens.

After a test, I usually:

- Roll out the winning version

- Document the result (what changed + why it worked)

- Use that insight to plan the next test

A/B testing Shopify popups isn’t about finding the perfect popup.

It’s about building a learning loop that keeps improving performance over time.
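For the documentation step, even a tiny structured log beats scattered notes. The sketch below stores each test with the same “If I change X, then Y will happen, because Z” structure used for hypotheses. The class name `PopupTestRecord`, its fields, and the sample values are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class PopupTestRecord:
    name: str
    change: str           # X: the one thing that changed
    expected_effect: str  # Y: what should happen
    reason: str           # Z: why it should happen
    metric: str
    winner: str           # "A", "B", or "inconclusive"
    notes: str = ""

    def hypothesis(self) -> str:
        return (
            f"If I change {self.change}, then {self.expected_effect}, "
            f"because {self.reason}."
        )


# Hypothetical entry for a finished CTA test.
record = PopupTestRecord(
    name="cta-text-test",
    change="the CTA from 'Submit' to 'Get My Discount'",
    expected_effect="the conversion rate will increase",
    reason="the value is clearer",
    metric="email signup rate",
    winner="B",
    notes="Next: test the headline with the same value-first wording.",
)
print(record.hypothesis())
print(json.dumps(asdict(record), indent=2))
```

Keeping the records as JSON makes it easy to review past tests before planning the next one.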

🛠️ Tools & Apps for A/B Testing Shopify Popups

Choosing the right tool is one of the most overlooked parts of A/B testing Shopify popups. In my experience, many popup tools look similar on the surface, but only a portion of them actually support real A/B testing with proper traffic splitting and reporting. If the tool doesn’t handle the experiment logic for you, the test usually ends up biased or abandoned.

🔌 Popup Tools That Support A/B Testing on Shopify

Not every popup app allows you to A/B test popups properly. Below are tools that do support popup-level A/B testing on Shopify and are commonly used for conversion optimization.

The key thing I look for here isn’t feature count—it’s whether the tool lets me change one variable, split traffic evenly, and track conversions clearly.

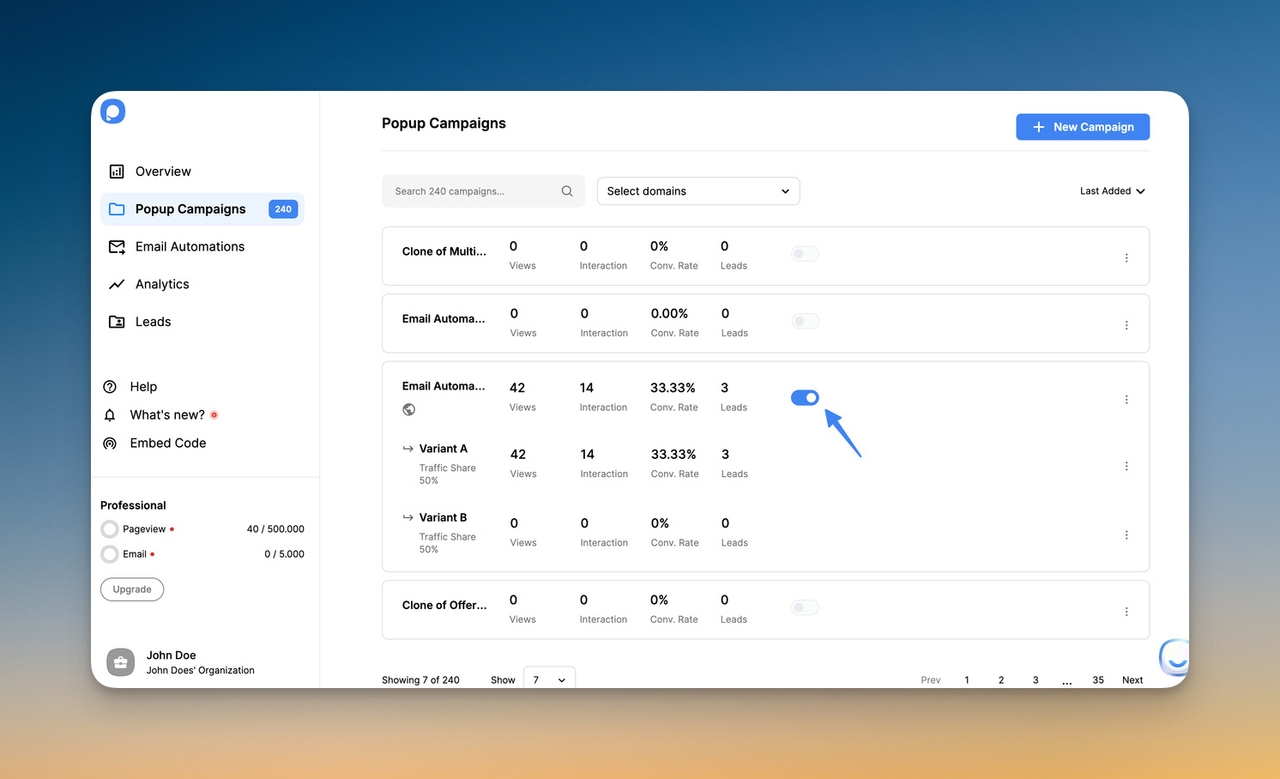

📌 Spotlight: Popupsmart and A/B Testing on Shopify

I want to call this one out because it’s often overlooked but actually gets the job done: Popupsmart supports A/B testing for Shopify popups in a straightforward, no-nonsense way.

What Popupsmart lets you do:

- Create multiple variations of the same popup

- Split traffic between versions (e.g., 50/50 or custom ratios)

- Test copy, CTA text, visuals, triggers, and targeting

- See performance metrics for each variant inside the dashboard

What I appreciate most about Popupsmart is how easy it is to spin up variations and run experiments without jumping through technical hoops. If you want to test two headlines, different discount offers, or even timing triggers without custom scripts, Popupsmart makes that process accessible, especially for merchants who aren’t full-time CRO nerds like me 😉

🧠 How I Choose the Right Popup A/B Testing Tool

I don’t believe in a universal “best” tool. Instead, I match the tool to the store’s reality.

Here’s how I usually decide:

Traffic Level

- Low traffic → simple A/B testing, longer test duration

- High traffic → faster iteration, more frequent tests

Testing Maturity

- Beginners → easy setup, clear reporting

- Advanced teams → deeper targeting and segmentation

What’s Being Tested

- Copy & CTA tests → lightweight tools work fine

- Timing & trigger tests → needs strong behavioral rules

- Design-heavy tests → visual editor quality matters more

⚠️ A Common Tool Mistake I See

One mistake I see over and over again is switching tools too quickly. A test doesn’t deliver a big win, so the tool gets blamed. In my experience, the issue is almost always the testing strategy, not the software.

A clear hypothesis and a clean setup with a “good enough” tool will outperform a messy experiment on the most advanced platform every time.

⚠️ Common A/B Testing Mistakes I See on Shopify Popups

I’ve made some of these mistakes myself early on, and I still see them happen all the time. The frustrating part? Most of them have nothing to do with tools or traffic. They come from how the test is structured. If you avoid the mistakes below, your popup A/B tests on Shopify will already be ahead of the curve.

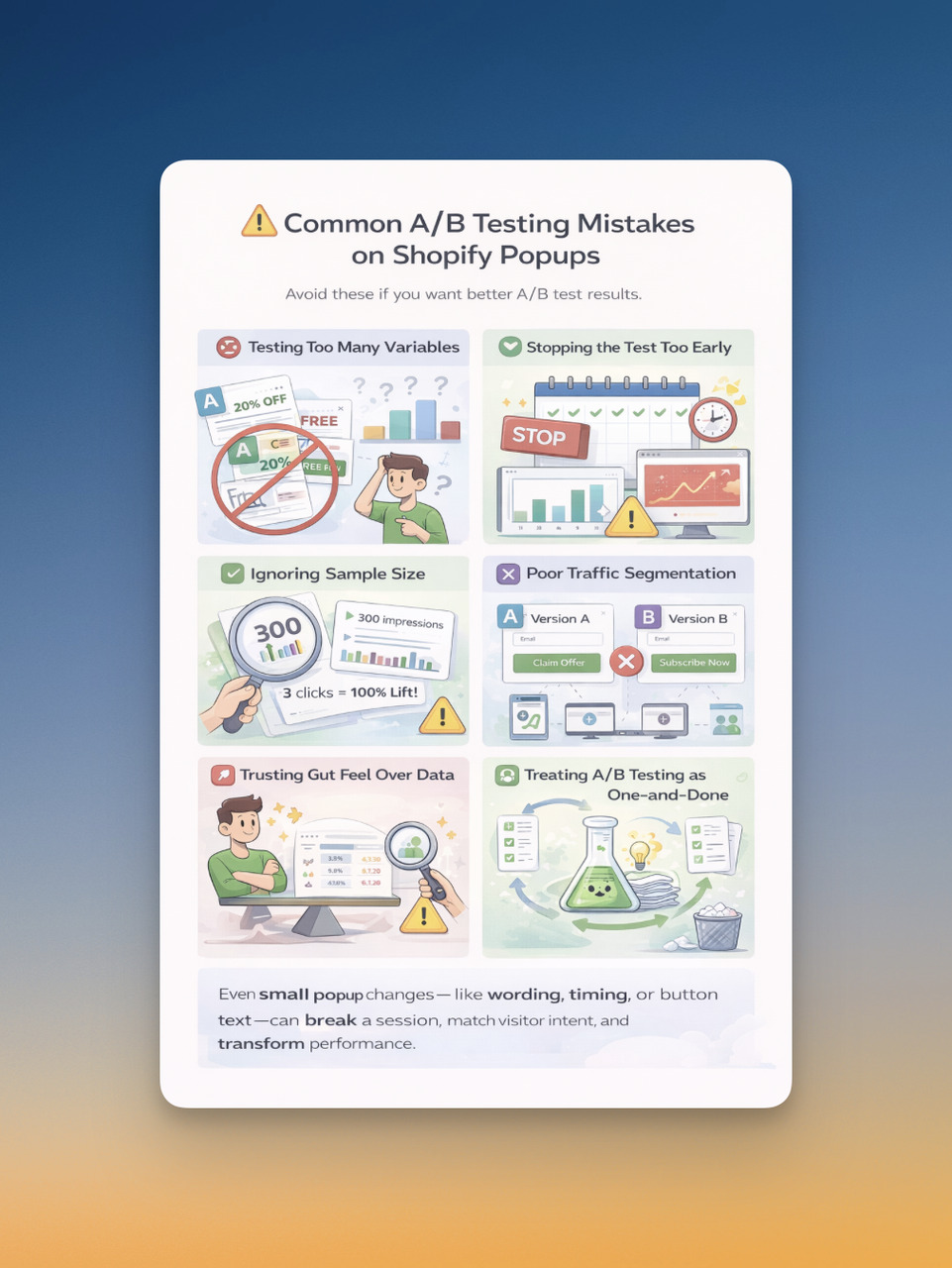

🚫 Testing Too Many Variables at Once

This is the most common mistake and the fastest way to learn nothing.

I’ll often see tests like:

- New headline

- New CTA

- New offer

- New design

…all launched at the same time.

When conversion rate changes, you have no idea why.

What I do instead:

- One test = one variable

- Headline test first

- CTA second

- Offer third

- Design last

If I can’t explain the result in one sentence, the test was too complex.

⏹️ Stopping the Test Too Early

I get the temptation. Version B looks amazing after one day. Someone gets excited. The test gets stopped.

That’s usually a mistake.

Early data is volatile, especially with popups. In my experience, day-one winners often lose by day seven.

My rule of thumb:

- Never stop before 7 days

- Prefer 14 days

- Always include weekends

If traffic is low, patience matters more than precision.

📉 Ignoring Sample Size and Context

A popup going from:

- 2 conversions → 4 conversions

might look like a 100% lift, but that doesn’t mean much.

I always ask:

- How many total impressions?

- How many total conversions?

- Was traffic stable during the test?

If numbers are too small, I treat the result as a directional signal, not a final decision.
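To see why tiny counts deserve caution, it helps to put a confidence interval around each rate. The sketch below uses the Wilson score interval, a standard method for proportions that I’m choosing for illustration. The 200 impressions per version is an assumption, since the example above only mentions conversions.

```python
import math
from statistics import NormalDist


def wilson_interval(conversions, impressions, confidence=0.95):
    """Wilson score interval for a conversion rate."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    rate = conversions / impressions
    denom = 1 + z**2 / impressions
    center = (rate + z**2 / (2 * impressions)) / denom
    margin = (
        z
        * math.sqrt(rate * (1 - rate) / impressions + z**2 / (4 * impressions**2))
        / denom
    )
    return center - margin, center + margin


# 2 vs 4 conversions, each out of a hypothetical 200 impressions.
low_a, high_a = wilson_interval(2, 200)
low_b, high_b = wilson_interval(4, 200)
print(f"A: {low_a:.2%} to {high_a:.2%}")
print(f"B: {low_b:.2%} to {high_b:.2%}")
```

With counts this small the two ranges overlap heavily, so the apparent “100% lift” is compatible with no real difference at all.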

🎯 Not Segmenting Traffic Properly

This one is subtle but dangerous.

If:

- Version A shows on mobile

- Version B shows mostly on desktop

You’re not A/B testing anymore.

I always double-check that:

- Device targeting is identical

- Page targeting is identical

- Audience rules are identical

Same users, same context; only one change.

🧠 Trusting Gut Feel Over Data

This hurts to admit, but it happens.

I’ve personally liked Version B more than Version A—better copy, cleaner design—only to see Version A win clearly. When that happens, I go with the data every time.

What I’ve learned:

- You are not your average visitor

- “Feels better” ≠ converts better

- Data beats taste, always

That doesn’t mean creativity doesn’t matter—it just means it needs validation.

🧪 Treating A/B Testing as a One-Off Task

Another mistake I see is running one A/B test and calling it optimization.

Real results come from iteration.

Here’s how I think about it:

Every test—win or lose—should inform the next one.

📊 Case Studies: Real Shopify Popup A/B Test Results

I’m only including case studies backed by real data you can verify. Popup-specific A/B test case studies with measurable numbers are rare in public sources, but the two below are directly relevant to popup experimentation and conversion lift, and both include real figures you can check.

🧪 Case Study 1: Popup Variation Converts Better (Simple A/B Test)

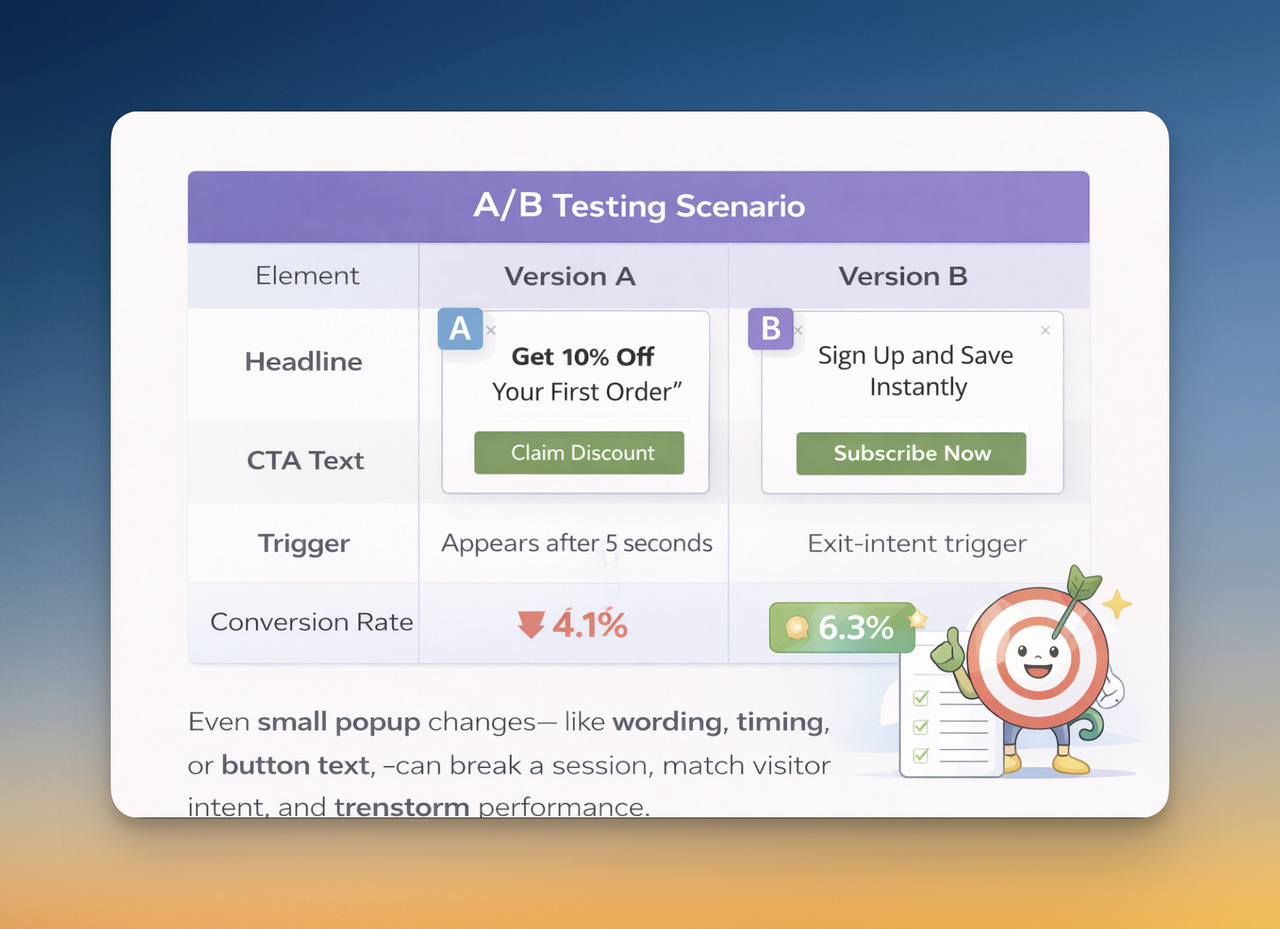

In a breakdown of A/B testing for popups, one real example compared two versions of a popup.

In this example, Version B outperformed Version A by a significant margin, going from a 4.1% conversion rate to 6.3%. This kind of result is exactly what we’re aiming for when we A/B test Shopify popups: a measurable difference that tells us which experience resonates better with visitors.
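It’s worth being precise about what a jump from 4.1% to 6.3% means, because “lift” can be reported in two ways. The short sketch below works through both using the rates from this example; the helper name `lift` is my own.

```python
def lift(rate_a: float, rate_b: float) -> tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift in percent)."""
    absolute_points = (rate_b - rate_a) * 100
    relative_percent = (rate_b - rate_a) / rate_a * 100
    return absolute_points, relative_percent


absolute_points, relative_percent = lift(0.041, 0.063)
print(f"Absolute lift: {absolute_points:.1f} percentage points")
print(f"Relative lift: {relative_percent:.1f}%")
```

That works out to 2.2 percentage points in absolute terms, or a relative lift of about 54%. Both describe the same result, so check which one a case study is quoting before comparing it with your own numbers.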

What this teaches us:

- Changing timing and wording together boosted performance, although with two changes in one test the lift can’t be attributed to either one alone

- Exit-intent triggers often catch visitors before they leave

- Clear, benefit-focused CTAs tend to perform better

This isn’t a Shopify-store–specific case, but in my experience most Shopify popup builders (like Popupsmart, OptiMonk, etc.) let you replicate this exact kind of test on your own store, and you often see similar lifts if you define your hypothesis well.

📈 Case Study 2: Fullscreen Popups vs Lightbox (Email & SMS Conversion Lift)

A large aggregated A/B test dataset from Recart (based on over 600 popup experiments across ecommerce sites) shows real conversion improvements when testing popup formats:

- Fullscreen popups lifted email capture conversion by 23.6% compared with traditional lightbox popups

- SMS capture rates improved by 21.6% with fullscreen vs lightbox

- Purchase conversion also increased modestly (1–7%) when fullscreen popups were used strategically rather than intrusively

What this teaches us:

- Format and presentation matter a lot — not just copy

- More attention on the popup (fullscreen) often means more conversions

- But subtlety still matters — the popup must feel legitimate rather than interruptive

📌 How I Use These Case Studies in Practice

In my own A/B tests on Shopify store popups, I take two lessons from these real examples:

- Don’t underestimate timing and trigger changes. Something as simple as changing from a time-delay trigger to exit-intent can yield measurable lifts, as the Popupsmart example shows.

- Popup format alone can impact conversion performance. Fullscreen variants often outperform traditional lightbox styles, especially for email and SMS captures, as the Recart data suggests.

Both of these insights have helped me create better A/B tests and avoid tests that waste traffic without meaningful learning.

🏁 Conclusion: Why A/B Testing Turns Shopify Popups into Intentional Experiences

At the end of the day, A/B testing Shopify popups isn’t really about optimization tools or conversion rate graphs. It’s about replacing assumptions with understanding. When you test different messages, offers, or triggers, you’re not just trying to “win” a test; you’re learning how your visitors think, what they respond to, and where friction actually exists.

Across real popup experiments, whether it’s a headline change, a timing adjustment, or a different incentive, the outcome is usually the same: clearer communication, fewer ignored popups, and more intentional engagement. Well-tested popups don’t feel louder or more aggressive. They feel more relevant. They answer a question the visitor already has instead of interrupting their experience.

Good on-site marketing doesn’t come from guessing better. It comes from listening better.

A/B testing is how Shopify popups start doing exactly that.

Final Thought ✨

Optimization doesn’t require big redesigns or complex systems. Sometimes, the most meaningful improvement comes from changing one small thing, and letting real users show you whether it worked.

And A/B testing is where that process begins.

🚀 Ready to Improve Your Shopify Popups with Confidence?

Start with one popup.

Test one variable.

Run it properly.

Learn from it.

That’s how popups stop being static elements and start becoming a real growth channel.

❓ Frequently Asked Questions

What should I A/B test first on a Shopify popup?

Start with the headline or main copy. It usually has the biggest impact on conversion rate.

How long should an A/B test run?

At least 7 days. Ideally 14 days to account for different traffic patterns.

Do I need high traffic to A/B test popups?

No. Low-traffic stores can still run useful tests by changing one variable and running tests longer.

Can I A/B test Shopify popups without an app?

It’s possible, but difficult. Most proper A/B tests require a tool that handles traffic splitting and tracking.

Is A/B testing popups bad for user experience?

No. When done correctly, it usually improves user experience by reducing friction and irrelevance.